Redfield Sketch Master, aka Sketchmaster, now in 2018 (v19.01, January 2019). Who knew?

* Still affordable at $40.

* Windows only. I expect it would not be flummoxed by anything back to Windows XP, but that’s just my guess.

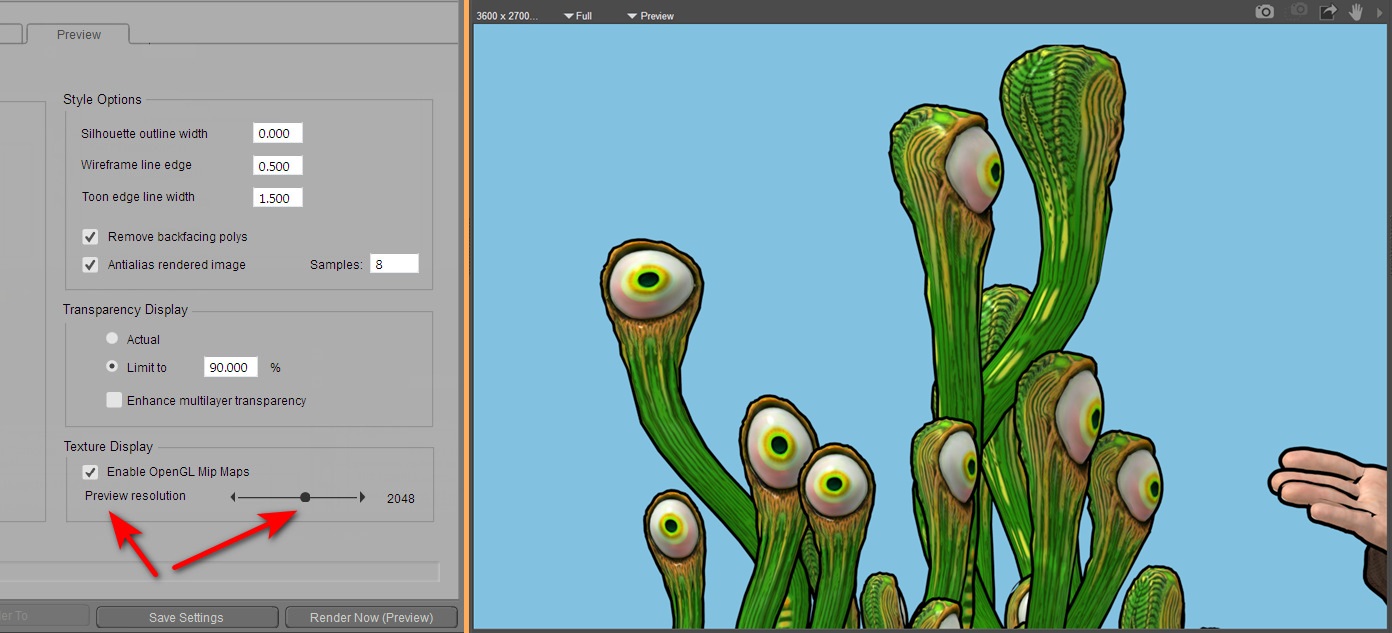

* Now multi-core, with a far bigger preview window. Previews are thus slower than before, about on a par with G’MIC. But the final render of a preset to your image is much faster at 1000px, due to the multi-core support. At 3600px the gain is smaller, maybe 40% faster, but still worth having.

* Ships with wholly new presets. The engine also appears to be different, perhaps more advanced or canvas-scaled, but the UI is very similar.

* Installs and runs alongside your old 3.3x version. Does not inherit the old custom presets.

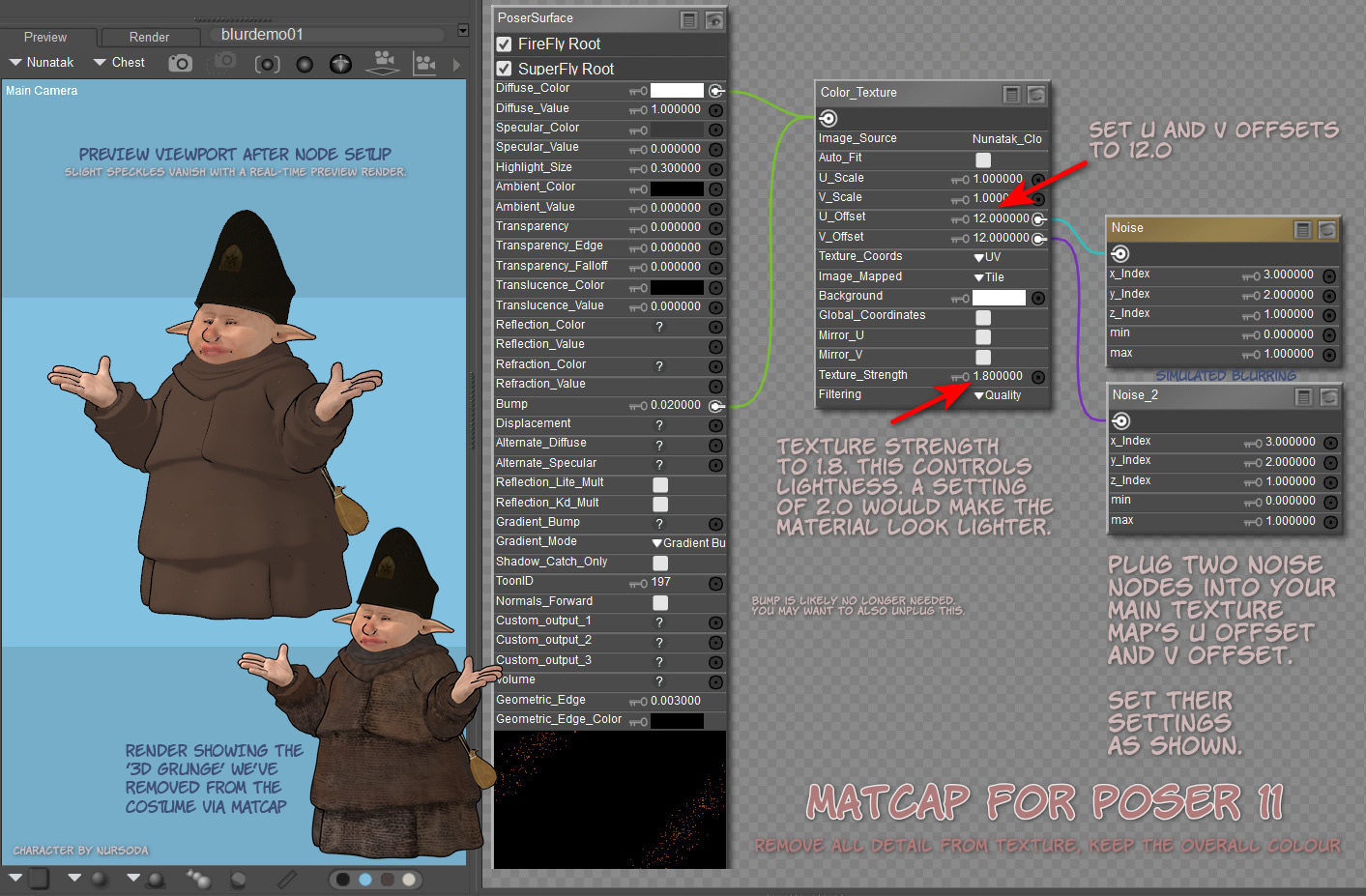

* The new version’s engine is no good for humanising 3D line-art, and for that you need the old 3.3x.

* Requires standard 8-bit images (“Last Draw” save-renders from DAZ are 16-bit, and thus need converting down to 8-bit before filtering).

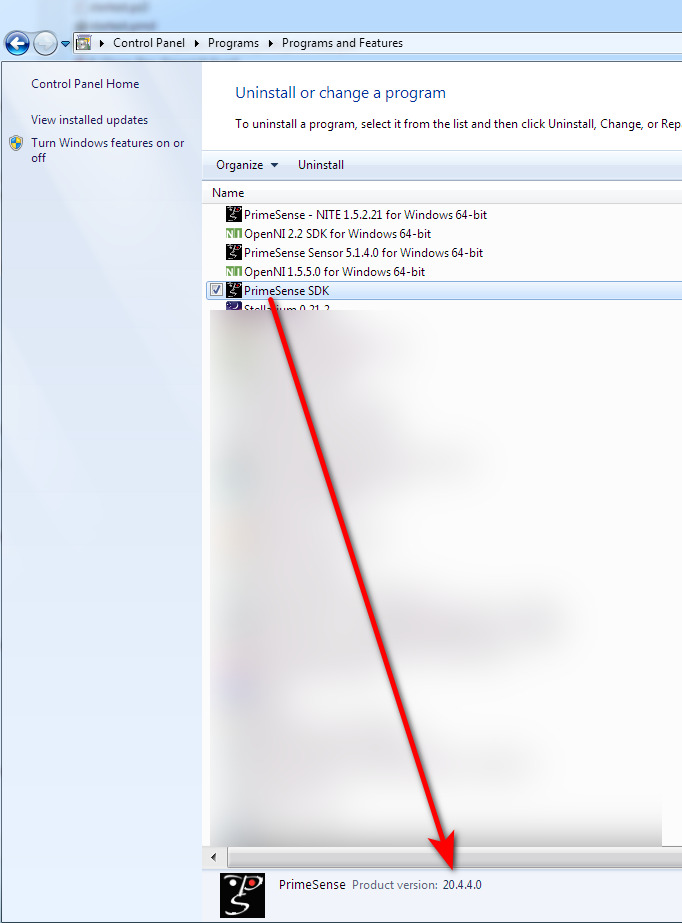
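For the curious, the 16-bit to 8-bit step mentioned above is simple arithmetic per colour channel. In practice you would just change the bit depth in your image editor, but here is a minimal Python sketch of what that conversion does. The function names `to_8bit` and `convert_pixels` are my own illustrations, and the pixels are assumed to be plain tuples of channel values rather than a real image file.

```python
def to_8bit(sample16: int) -> int:
    """Map one 16-bit channel value (0..65535) to 8 bits (0..255)."""
    if not 0 <= sample16 <= 65535:
        raise ValueError("expected a 16-bit value in 0..65535")
    # 65535 / 255 is exactly 257, so dividing by 257 rescales the full range.
    # Adding 128 first rounds to the nearest 8-bit value instead of truncating.
    return (sample16 + 128) // 257


def convert_pixels(pixels16):
    """Convert a list of 16-bit pixel tuples, e.g. (R, G, B), to 8-bit tuples."""
    return [tuple(to_8bit(channel) for channel in pixel) for pixel in pixels16]


# Illustrative values only: black, white and mid-grey in 16-bit.
sample = [(0, 0, 0), (65535, 65535, 65535), (32768, 32768, 32768)]
converted = convert_pixels(sample)
```

The point is only that no detail visible on screen is lost, since each 16-bit value maps to its nearest 8-bit neighbour.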

* Backup and restore of your saved custom presets is done by the same method as in the old version. They’re stored in the registry, so you save out a Windows .reg file, thus…

If you’re upgrading your PC to a new one, or a new OS, this is how you transfer your Sketch Master presets.
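If you would rather script the backup than click through the Registry Editor, Windows has a built-in `reg` command with `export` and `import` sub-commands. Below is a rough Python sketch that wraps it. Note the big assumption: I am not stating the actual registry key that Sketch Master uses, so `PRESET_KEY` is a made-up placeholder that you would need to replace with the real key found on your own machine. The function names are also my own.

```python
import subprocess

# HYPOTHETICAL placeholder: replace with the real key found in regedit.
PRESET_KEY = r"HKCU\Software\ExampleVendor\ExamplePlugin"


def build_export_command(key: str, reg_file: str) -> list:
    """Build the 'reg export' command that saves a key to a .reg file."""
    # /y overwrites an existing .reg file without prompting.
    return ["reg", "export", key, reg_file, "/y"]


def build_import_command(reg_file: str) -> list:
    """Build the 'reg import' command that restores a saved .reg file."""
    return ["reg", "import", reg_file]


def backup_presets(key: str, reg_file: str) -> None:
    """Run the export. Windows only; raises CalledProcessError on failure."""
    subprocess.run(build_export_command(key, reg_file), check=True)


def restore_presets(reg_file: str) -> None:
    """Run the import on the new PC or OS. Windows only."""
    subprocess.run(build_import_command(reg_file), check=True)
```

On the old machine you would call something like `backup_presets(PRESET_KEY, "sketchmaster-presets.reg")`, copy the file across, and then call `restore_presets("sketchmaster-presets.reg")` on the new one after installing the plugin.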