From the big-name Stability.ai, the release of Stable Audio Open… “an open source text-to-audio model for generating up to 47 seconds of samples and sound effects”. Potentially useful for makers who require sound FX or short loops that can be guaranteed to pass copyright checks on YouTube, Rumble etc.


DiffusionLight

DiffusionLight. An AI looks at a 2D photo scene, and then it makes the HDR lighting ball (aka ‘light probe’) that would have produced the scene’s lighting. Using this new light probe, you can then seamlessly render a 3D figure against the backplate photo. The sort of thing that should be in Poser 14, I’d suggest.

How to make a quick real-time z-depth (“depth cued”) render from Poser


The result:

How it’s done:

1. Save the Poser scene file you’re working on.

2. Turn on the Smooth Shaded display.

3. Turn on the Depth Cueing.

4. Delete all lights from the scene.

5. Make the background white.

6. Make a real-time Preview render, save it as a .JPG file.

7. Revert to the last saved file, undoing the changes.

Ok, that probably doesn’t sound all that quick. But here’s my Python script that automates all this grunt-work.

You need to make a one-time edit to the file path, in the script. Just indicate where you want rendered images to be saved. Make this simple change, save as a .PY file and then add to the C:\Program Files\Poser Software\Poser 13\Runtime\Python\poserScripts\ScriptsMenu folder. Restart Poser, and you’re ready for real-time depth map renders of your scene.

```python
# Make a quick depth-cued auto-render from Poser.
# Version 1.0 - December 2023. Tested in Poser 11 and 13.
# With thanks to bwldrd for the 'SetBackgroundColor' tip.

# From a saved Poser scene, auto render a quick real-time depth-map
# as a .JPG on white, then revert the scene to the last saved scene file.

# NOTE: The user will need to change the output directory 'dirPath=' to their chosen path.

import poser
import datetime

# Set up the saving and timestamp parameters. Change the save directory to suit your needs.
# Remember that the \\ double slashes are vital!! This is for Windows. Mac paths may be different?
dirPath = "C:\\Users\\WINDOWS_USER_NAME\\FOLDER_NAME\\"
ext = "JPG"
current_date = datetime.datetime.now().strftime("%a-%d-%b-%Y-%H-%M-%S")

# Are we dealing with a Poser scene?
scene = poser.Scene()

# First, we make the Ground invisible. Thus, no ground shadows or grid.
# Comment out these two lines with #'s if you don't want this to happen.
scene.SelectActor(scene.Actor("GROUND"))
scene.Actor("GROUND").SetVisible(0)

# Now we make sure the render is set to use a DPI of 300.
# And switch the render engine to real-time PREVIEW mode.
# This assumes the user has already set their preferred render size.
scene.SetResolution(300, 0)
scene.SetCurrentRenderEngine(poser.kRenderEngineCodePREVIEW)

# Now delete all lights in the scene. Then redraw the Poser scene.
lights = scene.Lights()
for light in lights:
    light.Delete()
scene.Draw()

# Now turn on the 'Smooth Shaded' display mode.
poser.ProcessCommand(1083)
scene.Draw()

# Now toggle 'Depth Cue' display mode on.
poser.ProcessCommand(1044)
scene.Draw()

# Figure style is now set to use document style, in case it wasn't for some reason.
poser.ProcessCommand(1438)
scene.Draw()

# Set the scene's background color to white.
scene.SetBackgroundColor(1.0, 1.0, 1.0)
scene.Draw()

# Now we render the scene in Preview at 300dpi and save to a .JPG file.
# It must be .JPG otherwise we get a .PNG cutout with alpha and fringing.
# The file is timestamped and saved into the user's preferred directory.
scene.Render()
scene.SaveImage(ext, dirPath + current_date)

# Done. Now we revert the entire scene, to its last saved state. This means
# that it doesn't matter that we changed the display modes and deleted lights.
poser.ProcessCommand(7)
```
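The hard-coded `dirPath` with its double backslashes is the part of the script most likely to trip people up. As a minimal sketch of an alternative, the save path could be built with Python's standard `pathlib`, which picks the right separator on Windows or Mac. Note that `build_output_path` is a hypothetical helper name of my own, not part of the Poser API, and this has not been tested inside Poser itself.

```python
import datetime
from pathlib import Path


def build_output_path(out_dir, when=None, stem_format="%a-%d-%b-%Y-%H-%M-%S"):
    """Return 'out_dir/<timestamp>' as a string, with no file extension.

    Hypothetical helper: the extension is left off because the script
    passes it to SaveImage separately, as 'ext'.
    """
    if when is None:
        when = datetime.datetime.now()
    # Path's '/' operator joins with the correct separator for the OS.
    return str(Path(out_dir) / when.strftime(stem_format))


if __name__ == "__main__":
    # Example: save into a 'PoserDepth' folder under the user's home folder.
    # 'PoserDepth' is a placeholder folder name.
    print(build_output_path(Path.home() / "PoserDepth"))
```

In the script, the result would then stand in for `dirPath + current_date` in the `scene.SaveImage(...)` call. The folder still has to exist before Poser tries to save into it.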

Why do all this? Because AI image making can use depth-maps to recreate 3D scenes, in combination with the descriptive text prompt. AI + Poser’s content = accurate AI visuals of anything Poser has in its Library.

3D-GPT

3D-GPT. If E-on’s Vue was a ‘text -to- 3D landscape model’ AI…

Release: OpenPose for Poser – Poser to SD

Ken K has released OpenPose for Poser 12, which provides the first ‘Poser to Stable Diffusion’ pipeline.

The Video Demo suggests it’s useful for speeding up the feeding of SD with exact poses, and it looks especially useful for hands. While a ControlNet can provide native OpenPose estimation, the hands are often not well detected. Ken’s method lets you get excellent hands.

The free alternative might be something like a .BVH to OpenPose converter. But no-one seems to have made one, which seems rather amazing. Everyone wants to do it from analysed video pixels rather than the skeletons of 3D figures. So Ken’s new product seems unique.
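To give a rough idea of what such a skeleton-based converter would involve, here is a minimal sketch. It is not Ken's method and not a real BVH parser. It assumes you already have 3D joint positions from a posed figure, named after OpenPose's 18-point COCO body layout, and it uses a simple made-up pinhole camera (`focal` and `camera_distance` are illustrative values). The output mimics the `pose_keypoints_2d` JSON layout that OpenPose writes. The `version` value is an assumption.

```python
import json

# Keypoint order of OpenPose's 18-point COCO body model.
COCO_18 = [
    "Nose", "Neck", "RShoulder", "RElbow", "RWrist", "LShoulder",
    "LElbow", "LWrist", "RHip", "RKnee", "RAnkle", "LHip",
    "LKnee", "LAnkle", "REye", "LEye", "REar", "LEar",
]


def project_point(point, width, height, focal=1.5, camera_distance=4.0):
    """Pinhole-project a 3D point (x right, y up, z toward camera) to pixels.

    The camera sits on the +z axis looking at the origin. Returns None
    if the point is at or behind the camera.
    """
    x, y, z = point
    depth = camera_distance - z
    if depth <= 0:
        return None
    scale = focal / depth
    px = width / 2 + x * scale * height / 2
    py = height / 2 - y * scale * height / 2  # image y runs downward
    return px, py


def joints_to_openpose(joints, width=512, height=512):
    """Turn {joint name: (x, y, z)} into an OpenPose-style dict.

    Joints that are missing or cannot be projected get x=0, y=0 and a
    confidence of 0, as OpenPose does for undetected keypoints.
    """
    flat = []
    for name in COCO_18:
        pixel = project_point(joints[name], width, height) if name in joints else None
        if pixel is None:
            flat += [0.0, 0.0, 0.0]
        else:
            flat += [round(pixel[0], 2), round(pixel[1], 2), 1.0]
    return {"version": 1.3, "people": [{"pose_keypoints_2d": flat}]}


if __name__ == "__main__":
    demo = {"Nose": (0.0, 0.6, 0.0), "Neck": (0.0, 0.45, 0.0)}
    print(json.dumps(joints_to_openpose(demo)))
```

The harder part, which this sketch skips, is reading the .BVH hierarchy and mapping each figure's bone names onto these 18 keypoints.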

Release: Cartoon Animator 5.2

Reallusion’s Cartoon Animator (formerly CrazyTalk Animator) has a new Motion Pilot feature. Seems to be a motion-damped mouse-cursor, so you can easily draw an editable motion path. But it has adjustable settings, as you can see here…

For full details see the Motion Pilot demo video.

ElevenLabs is out of beta

The leader in AI text-to-speech voice, Poland’s ElevenLabs, is now out of beta. Now supporting 28 languages in ‘Eleven Multilingual v2’. Can be paired with Professional Voice Cloning, which should mean that the correct intonations in your input voice also carry over into the output in another language. They also have a ‘Voice Styles’ library.

The newly added languages are…

Chinese, Korean, Dutch, Turkish, Swedish, Indonesian, Filipino, Japanese, Ukrainian, Greek, Czech, Finnish, Romanian, Danish, Bulgarian, Malay, Slovak, Croatian, Classic Arabic and Tamil.

The Pricing is nice, though no PayPal. Someone making a short 90 minute audiobook per month would need the $22 a month subscription, but the free and starter tiers are very reasonable. Especially so for those making short 12-20 minute animations with Poser and DAZ.
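To work out which tier a project needs, it helps to turn running time into a rough character count, on the assumption that the quotas are counted in characters of input text. A minimal sketch follows. The narration speed of 150 words per minute and the average of 6 characters per word (including the space) are my own rough assumptions, so adjust them to your script.

```python
def estimate_characters(minutes, words_per_minute=150, chars_per_word=6):
    """Rough character count for a narration of the given length.

    Both defaults are assumptions, not ElevenLabs figures.
    """
    return minutes * words_per_minute * chars_per_word


if __name__ == "__main__":
    for minutes in (12, 20, 90):
        print(minutes, "minutes is roughly", estimate_characters(minutes), "characters")
```

Compare the result against the monthly character allowance shown for each tier on the pricing page.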

SuperFlying Dreamland for Poser

Poser could be the ‘killer app’ in creative AI, in terms of usable graphics production for storytelling.

Imagine an AI that takes what you see in the Poser viewport, and works on that, giving you 98% consistent character renders which would allow the creation of graphic novels etc.

The aim would be to keep all character details consistent and stable, while also ‘AI rendering’ the viewport into a consistent professional ‘art style’. Auto-analysis of a quick real-time render from the Viewport might be needed (already here, elsewhere) and auto-prompt construction (already here). Perhaps there might be some on-the-fly LoRA training going on too, behind the scenes. Then, the AI image generation would be done.

You can kind of do all this now, outside of Poser, using Poser renders. But what if it was all neatly integrated into Poser, and ran on SDXL? All those royalty-free runtime assets then become super-valuable, since with their aid you can easily get the AI to do exactly what you want. Face, expression, pose, clothes, camera-angle. Hands. All output by the AI to the usual masked .PNG file, ready to drop over a 2D backplate in Photoshop. In three clicks. And all consistent between images, enough to satisfy even the most fersnickety regular comics reader.

The aim would not be to go wild, but to get something very close to the arrangement and content seen in the real-time viewport. It doesn’t necessarily have to be done by Bondware/Reallusion either, since Poser is Python 3 friendly and very extensible. All that would be needed, perhaps, would be to open up PostFX to be able to run a Python plugin that applies its own FX on real-time renders.

So imagine Poser’s Comic Book Preview or Sketch rendering, but done by an AI on a purpose-built ‘AI-native’ PC (coming in 2025 in retail, if not before). With No Drawing Required™ and Character Consistency.™ Let’s call it SuperFlying Dreamland.™ 😉

Auto Effects

AI-powered auto sound-effects for a video. Finds… “the right sound-effects (SFX) to match moments in a video”. A task which can take a day or more of searching, for a long video. Plus the trimming, volume balancing, and slotting into the video.

Of course it’s not going to do much for Tom & Jerry style animation SFX, compared to the real thing. But I guess for regular PowerPoint things (“that’s a dog, it barks, get bark-sound”) it could be useful for those making a ‘holiday-photos slideshow’ presentation.

And it may also save time for creatives. For instance this could interface in interesting ways with the giant Freesound library’s new simple taxonomy. (“That’s a dog, it barks, get links to top-12 bark sounds on Freesound”). That would save some time. Not so much a new ‘recommender’ system (they’re always dim-witted), but more a new creative ‘options bundler’ system. Likely to find a place within the emerging and more complex AI-powered script-flow workflow software.

CodeWhisperer

Possibly interesting for Python-coders who craft Python scripts for Poser, Vue, Blender, and others. The new Amazon CodeWhisperer is a code-generator that appears to be genuinely free and supposedly “unlimited”, although you do need an Amazon Web Services (AWS) account.

It’s powered by AI, of course. Be warned that the ‘free’ tier of AWS is only a 12 month trial, last I heard. Then you have to pay to keep the AWS account.

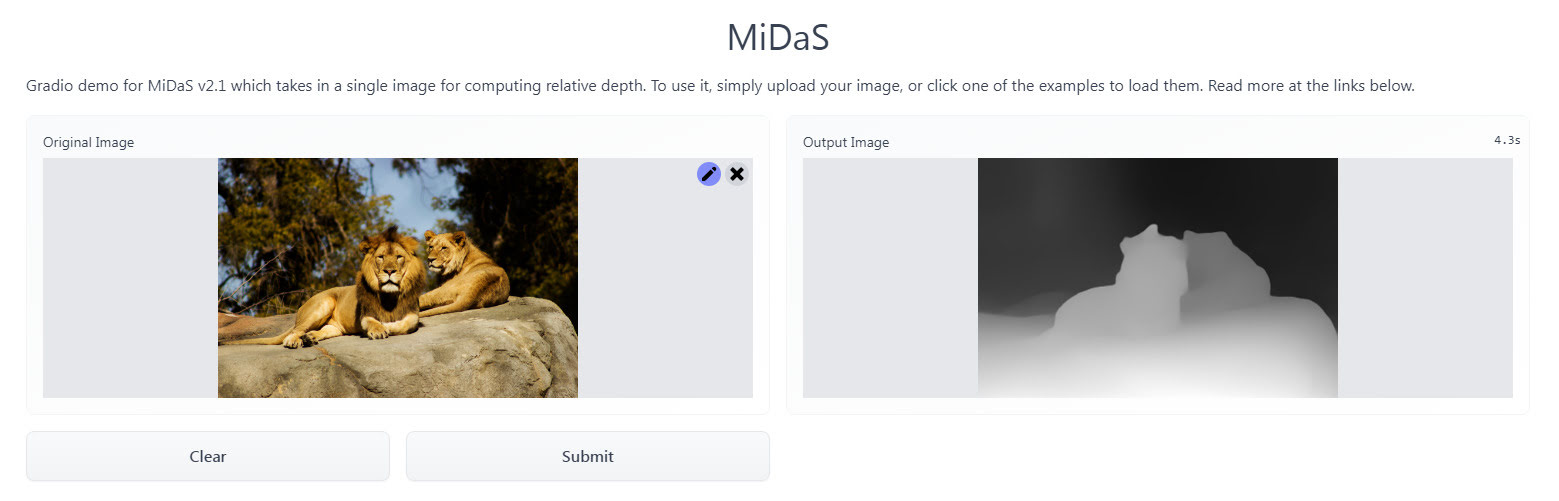

The MiDaS touch

MiDaS uses trained AI to take a normal 2D image and output a 3D depth-map. In Poser-speak it’s like Poser’s ‘auxiliary Z-depth’ pass or render.

Free and public, no sign-up needed. Just drag-and-drop your image. It can probably also be installed locally, though I haven’t looked at the requirements for that.

Once you have it you can use the usual Photoshop layer inversion/blending-mode tricks to create ‘depth-fog’ in the scene, where there was none before.
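For those who would rather script it than use Photoshop, the same ‘depth-fog’ idea comes down to a per-pixel blend. This is a minimal sketch and not a MiDaS or Photoshop API. It assumes an 8-bit greyscale depth value per pixel, and that near objects are bright in the depth-map (hence the inversion), so flip `near_is_bright` if your map is the other way around. The function name and the `strength` setting are made up for illustration.

```python
def add_depth_fog(pixel, depth, fog=(255, 255, 255), strength=0.8, near_is_bright=True):
    """Blend one RGB pixel toward the fog colour according to its depth.

    pixel and fog are (r, g, b) tuples of 0-255 values.
    depth is the 0-255 value from the depth-map at the same position.
    """
    d = depth / 255.0
    # Invert when near = bright, so that distant pixels get the most fog.
    distance = 1.0 - d if near_is_bright else d
    amount = max(0.0, min(1.0, distance * strength))
    return tuple(round(c * (1.0 - amount) + f * amount) for c, f in zip(pixel, fog))


if __name__ == "__main__":
    print(add_depth_fog((100, 50, 0), 255))  # nearest pixel, no fog added
    print(add_depth_fog((100, 50, 0), 64))   # distant pixel, mostly fog
```

Looping this over every pixel of the image and its depth-map gives the fogged picture. An image library would do the same blend much faster.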

Dream Textures

Dream Textures is the Stable Diffusion AI, sending AI-gen textures direct to the Blender shader editor. Can be used with DreamStudio as the paid Cloud generator.

Since Poser does Python, I don’t see why something similar couldn’t be done for Poser. Doubtless there will soon be AIs that can take a text-prompt and pop out a finished .PBR material. For example: “Make me a lava material that looks like glowing snake-skin”.

Text-based AI for mo-cap

Human Motion Diffusion Model is a new text-based AI for generating mo-cap animation for a 3D figure. Still a science-paper + source code at present.

But it can’t be long before you type in a text description to generate a rigged and clothed 3D figure (plus some basic helmet-hair), and can then also generate a set of motions to apply to the figure’s .FBX export file. Useful for games makers needing lots of cheaply-made NPCs, provided they can be game-ready.

But for Poser and DAZ users, the ideal would be to have reliable ‘text to mo-cap’ exist as a module within the software. Even better would be to have an AI build you a bespoke AI-model by examining all the mo-cap in your runtime, thus gearing it precisely to the base figure type you intend to target.

YouTube – never click the annoying “See more…” again

A useful new UserScript for your Web browser. YouTube: expand description and long comments. No more clicking on “See more…”. With this installed, everything below the video is already open, and you just scroll down and cast your eye through it. Tested, and can be used alongside a UserScript that serves as a YouTube Comments Blocker.

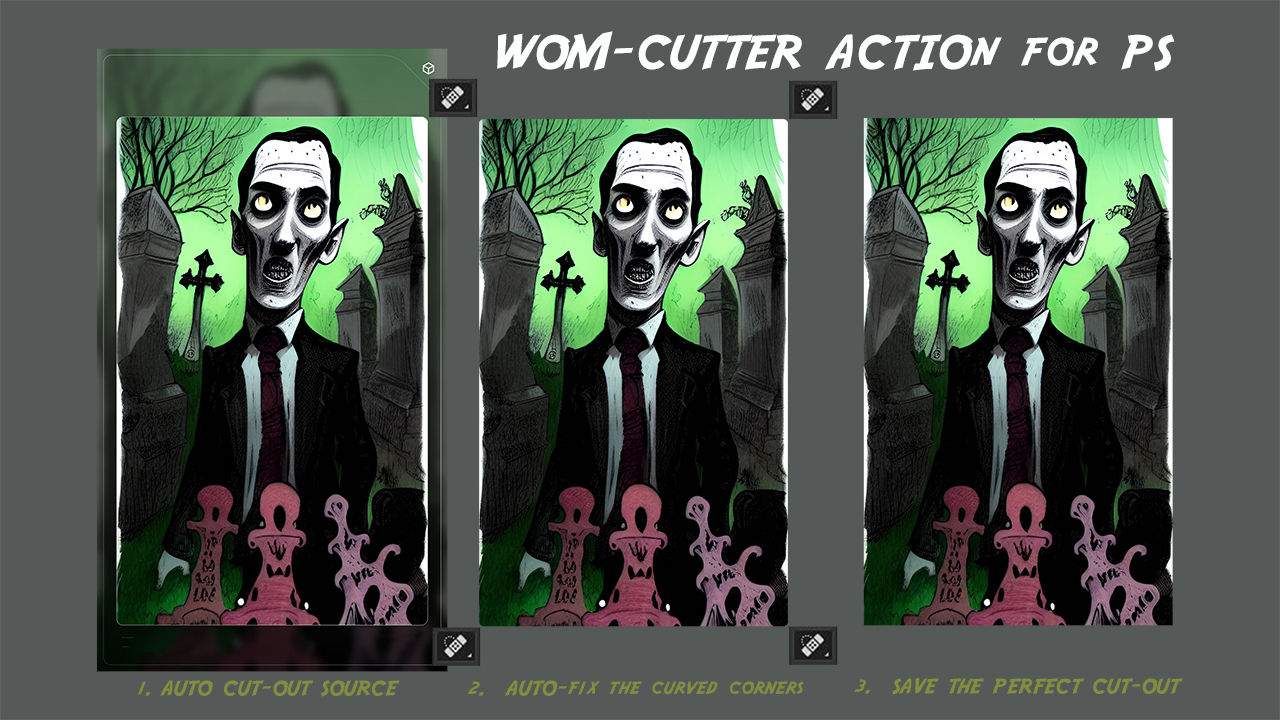

Photoshop Action: Cut-out ‘Dream by Wombo’ AI-pictures

Available now, my $2 Photoshop Action for users of the “Dream by Wombo” AI image generator. It very precisely and automatically cuts the picture out of a standard ‘Dream by Wombo’ AI-generated picture-card. It then auto-heals each of the tiny curved corners, and finally it saves the cut-out as a copy.

It works with the current Wombo output size available to free users. If that output size changes in future, then I’ll update the Action.