Pallaidium, claimed to be an AI ‘movie generator’ and editor, based around Blender. Requires CUDA 12.4, which in combination with Python means it’s effectively for Windows 10 or 11. Free, open source, and locally run on your PC.

Category Archives: The Animation Industry

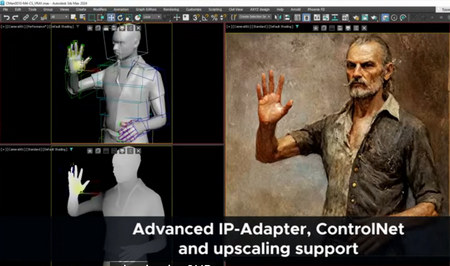

Stable Diffusion in 3DSMax

tyFlow 1.111 adds Stable Diffusion in 3DSMax, with its tyDiffusion module “matching simple guide geometry” and “depth” from the 3D scene, to make “detailed” generated images that closely follow the 3D scene. The SD UI being used is ComfyUI. SD 1.5 Models, LORAs and ControlNets are supported.

Interestingly, it appears to be free…

tyDiffusion is available in both the Free and Pro editions of tyFlow, with users of the Free edition getting support for GPU acceleration.

Requires 3ds Max 2018 or higher [tyFlow = “Max 2018”, but tyDiffusion = “Max 2023+”].

Yup, and on an open 435MB download.

Theoretically then, one could take a Poser scene to Vue, then to Max, then ‘render’ it with AI. Though you can do something similar manually, rendering line-art and a depth map from a Poser scene and using them in ControlNet slots. Unless tyDiffusion is extracting some extra ‘secret sauce’ from the 3D scene, such as PoseNet poses from figures, or light maps for scene lighting.

However, I’m still hoping Renderosity has the sense to save Poser, by making a similar free and robust SD ‘rendering’ plugin for Poser 12 through 14.

ElevenLabs now generates sound FX

Leading audio generator ElevenLabs now offers an AI Text Prompt to Sound Effects Generator. Possibly useful if you can’t find what you need on the FreeSound website or similar. A 20-second limit, at present.

Beware of InsightFace

Beware of integrating any AI face-swopping technology from the open-source InsightFace developers. Because they appear to be getting very screwy on the legal side of things, for instance issuing several takedown notices for benign third-party YouTube videos… in which a well-known and respected YouTuber simply shows the technology. InsightFace’s products are thus not something you would want to use in your animations or graphic novels, I’d suggest, in case of legal problems with future licensing and distribution of your work.

‘Animation at Work’ 2023 Contest winners

Winners and videos for the Cartoon Animator software’s annual ‘Animation at Work’ 2023 Contest.

The winner is Nandor Toth, from Hungary…

Release: Cartoon Animator 5.2

Reallusion’s Cartoon Animator (formerly CrazyTalk Animator) has a new Motion Pilot feature. Seems to be a motion-damped mouse-cursor, so you can easily draw an editable motion path. But it has adjustable settings, as you can see here…

For full details see the Motion Pilot demo video.

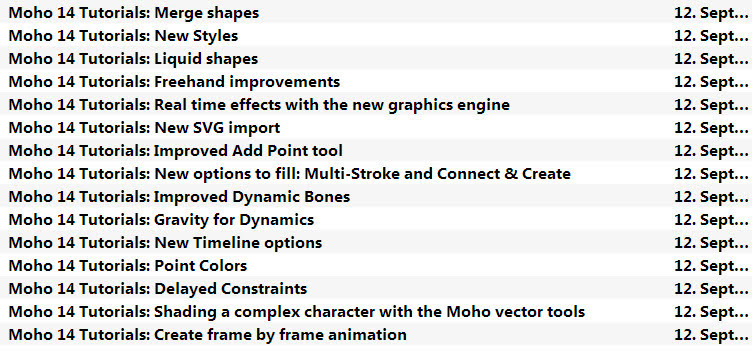

Release: Moho 14

Moho 14 is a big release for the 2D animation software (formerly Anime Studio). New graphics-engine, “near” real-time preview, new intuitive drawing tools for the new engine. Still no restored Poser import, but lots of other new features.

They also have a new 38-video beginner’s course YouTube Playlist.

Auto Effects

AI-powered auto sound-effects for a video. Finds… “the right sound-effects (SFX) to match moments in a video”. A task which can take a day or more of finding, for a long video. Plus the trimming, volume balancing, and slotting in to the video.

Of course it’s not going to do much for Tom & Jerry style animation SFX, compared to the real thing. But I guess for regular PowerPoint things (“that’s a dog, it barks, get bark-sound”) it could be useful for those making a ‘holiday-photos slideshow’ presentation.

And it may also save time for creatives. For instance this could interface in interesting ways with the giant Freesound library’s new simple taxonomy. (“That’s a dog, it barks, get links to top-12 bark sounds on Freesound”). That would save some time. Not so much a new ‘recommender’ system (they’re always dim-witted), but more a new creative ‘options bundler’ system. Likely to find a place within the emerging and more complex AI-powered script-flow workflow software.

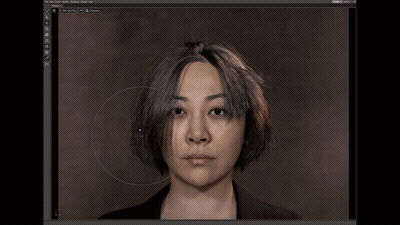

AI-driven hair movement

As good as dForce? “Helmet-hair” gone, forever? A new AI driven Hair Simulation on the GPU.


Get your Mojo on…

AI boffins re-invent Pandromeda’s Mojoworld…

Our method enables simulating long flights through 3D landscapes, while maintaining global scene consistency – for instance, returning to the starting point yields the same view of the scene.

British Pronunciation in IPA, for Balabolka TTS

I made a 65,000 word Dictionary of British Pronunciation for the TTS freeware Balabolka, with pre-made IPA pronunciation tags alongside each word. It’s in Balabolka’s .BXT file format, which it can load and which can handle the IPA phoneme symbols.

Possibly useful for those using TTS for making clearly-voiced English tutorials or animations, using the British IVONA 2 voices, and who’re stuck on the pronunciation of a word that they can’t easily substitute. With this you can write freely, knowing that it’s unlikely you’ll have to substitute a dozen or more words with simpler or different forms that don’t quite express what you want to convey.

You can load it in Balabolka and then keep it on a tab in the background, for easy consultation. A good test is getting Ivona 2 Brian to say “mature” in a sentence. It’s very difficult unless you use the IPA coded tag.

For use with the abandonware British voices Ivona 2 Amy, Ivona 2 Ivy, Ivona 2 Emma & Brian. Neospeech Voiceware Bridget is also a very good ‘posh’ British voice, though after install will wrongly show up as ‘United States’ in the list of voice names. Most of the time these do a good job on their own, but sometimes you may need more precision — especially for short comedy animation — and the IPA tags give you that.

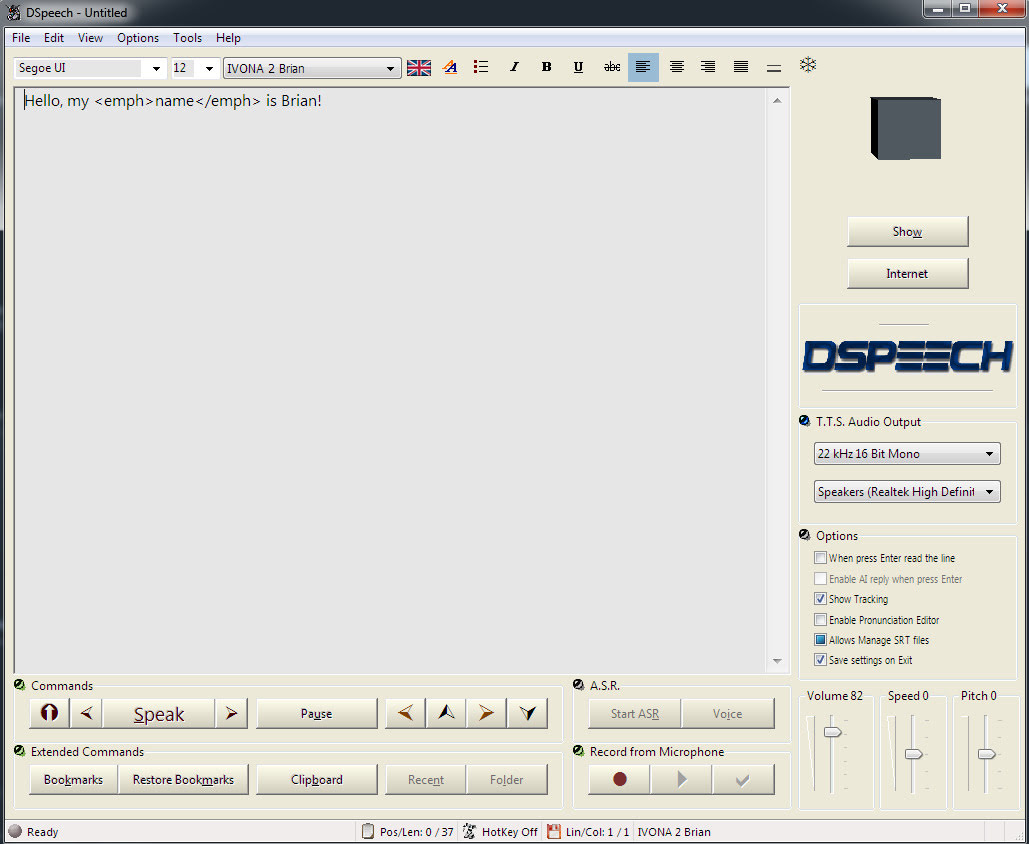

DSpeech on test against Balabolka

It seems rather odd to consider old-school text-to-speech software and SAPI5 voices, at a time when Poland’s ElevenLabs is doing such great things with AI-generated voices. But I’m always one to cherish old Windows freeware, and at present all the new AI voices are online and require a monthly/yearly subscription. So I was pleased to find an alternative freeware to Balabolka for desktop PC text-to-speech using SAPI5 voices. Many such voices are also now abandonware on Archive.org, the key companies having since been sold on several times.

Made in Italy, the DSpeech TTS freeware used to be fairly basic, but it’s improved enormously since about 2016. Though this is not a fact reflected in its rather basic 1990s-style download page, which you’ll have to overlook. This freeware is now in version 1.74 (spring 2022). It’s genuine one-man freeware, and is feature-comparable with Balabolka though a bit rougher in UI and in the English translation of its Help.

The DSpeech download link uses only a .GIF button, so if you have a .GIF blocker in your Web browser, you can instead right-click the page and choose ‘View Source’. You should then see a live working link to the download in the HTML…
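If the page source is long, the same hunt for the link can be scripted. Here is a minimal sketch in Python, assuming you have the page’s HTML as a string. The sample markup and the file names in it are made up for illustration, and are not copied from the real DSpeech page.

```python
from html.parser import HTMLParser


class LinkFinder(HTMLParser):
    """Collect href values from <a> tags, even when the link body is only an image."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_download_links(html, extensions=(".zip", ".exe")):
    """Return hrefs that end in one of the given archive/installer extensions."""
    finder = LinkFinder()
    finder.feed(html)
    return [link for link in finder.links if link.lower().endswith(extensions)]


# Hypothetical page fragment: a download link wrapped around a .GIF button.
SAMPLE_HTML = """
<p><a href="index.html">Home</a></p>
<p><a href="files/example_tts.zip"><img src="download.gif" alt=""></a></p>
"""

print(find_download_links(SAMPLE_HTML))
```

The link is found from its `href` alone, so it doesn’t matter that the browser never displayed the button image.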

The English manual is included in the software. There’s no Windows installer, just unzip where you want and run it.

SCRIPTING: Beyond the usual control tags, DSpeech supports basic scripting including voice-recognition and script loops. Which is unusual. Apparently it can even read out VLCplayer movie sub-titles in real-time, in a chosen SAPI5 TTS voice and speed.

TAGS: The tagging menus make switching voices easy. There’s better right-click support than in the latest Balabolka for adding tags, though that’s not saying much. When you highlight a word in DSpeech, and add a tag, the word is not wrapped with a closing and opening tag, it’s deleted. Urgh! Having right-click is great, but… the rest of the tag insertion system is not good.
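To be clear about what ‘wrapping’ should mean here, a minimal sketch in Python of the behaviour one wants from a tag-insertion tool. The function name is my own, and `<emph>` is used only as an example of a paired SAPI5 XML tag. It is not a claim about which tags DSpeech or Balabolka actually insert.

```python
def wrap_selection(text, start, end, open_tag, close_tag):
    """Wrap text[start:end] in an opening and closing tag, keeping the selected words."""
    if not 0 <= start <= end <= len(text):
        raise ValueError("selection is outside the text")
    return text[:start] + open_tag + text[start:end] + close_tag + text[end:]


line = "This is a very mature cheese."
start = line.index("mature")      # where the highlighted word begins
end = start + len("mature")       # where it ends

print(wrap_selection(line, start, end, "<emph>", "</emph>"))
# prints: This is a very <emph>mature</emph> cheese.
```

The highlighted word survives between the two tags, rather than being replaced by them.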

LOQUENDO: DSpeech is supposed to support Loquendo ‘voice expressions’ (laugh, sigh etc) via the Italian Loquendo 6 ‘Paola’ and ‘Luca’ TTS voices, combining words with special expressive tags such as \_Laugh and suchlike. Later the tag syntax was changed to \item=Laugh in Loquendo version 7 voices. But while these v6 voices work fine in any DSpeech, and v7 voices work fine in DSpeech v.1.72.29 (December 2018, not the latest 1.74.x), their expressive cues no longer vocalise in DSpeech. You just hear silence.

Spanish Loquendo 7 voices (not 6) can however ‘express’ when used in Loquendo’s own Java-based TTS Director, which came with the Loquendo SDK. See YouTube for examples and useful links.

Regrettably neither the Loquendo 6 nor 7 voices can even be played in the other TTS freeware Balabolka, though they do show up on its voice menu. It thus seems that properly-working Loquendo voices are limited to…

* Loquendo 6 (any voice) on DSpeech 1.74.x or earlier. Loquendo 7 not supported on the latest DSpeech.

* Loquendo 7 (Spanish) on DSpeech 1.72.29 (or earlier?), or Loquendo 7 (Spanish) on Loquendo TTS Director with SDK and Spanish pack.

The Spanish version 7 voices do however have ‘expressives’ that work fine with Loquendo TTS Director 7, which was Windows freeware which shipped with the developer/API/SDK kit. This success at least showed me that the problem was not with my PC or a 32-bit / 64-bit Windows clash, at least for version 7 voices.

Yet it’s strange. Obviously DSpeech could, at one time, play the ‘expressives’ in the Loquendo 6 voices. But, no longer, it seems. Switching back to an older DSpeech 1.72.29 didn’t cure the problem, but it did usefully fix the playing of the Loquendo 7 voices. I suspect that Loquendo 6 voices now have a 32-bit / 64-bit problem on 64-bit Windows, despite the player and voices both being 32-bit.

Loquendo TTS Director voices have a complete list of expressives in the C:\Program Files (x86)\Loquendo\LTTS7\data\voices\Soledad\SoledadGildedParalinguistics.sde file (change name for each voice). Open it in Notepad++ to see the list in plain-text. For instance, Soledad has the following, and obviously you can also mix and match and tone-shift…

\item=Ah

\item=Ah_01

\item=Ah_02

\item=Ay

\item=Ay_01

\item=Breath

\item=Breath_01

\item=Buh

\item=Buh_01

\item=Buuu

\item=Buuu_01

\item=Cataplum

\item=Cataplum_01

\item=Click

\item=Click_01

\item=Click_02

\item=Cough

\item=Cough_01

\item=Cough_02

\item=Cry

\item=Cry_01

\item=Cry_02

\item=Cry_03

\item=Ehm

\item=Ehm_01

\item=Epa

\item=Epa_01

\item=Hey

\item=Hey_01

\item=Hiccup

\item=Hiccup_01

\item=Hiccup_02

\item=Laugh

\item=Laugh_01

\item=Laugh_02

\item=Mhmm

\item=Mhmm_01

\item=Mhmm_02

\item=Mhmm_03

\item=Oh

\item=Oh_01

\item=Ohoh

\item=Ohoh_01

\item=Ops

\item=Ops_01

\item=Prrr

\item=Prrr_01

\item=Shhh

\item=Shhh_01

\item=Sigh

\item=Sigh_01

\item=Sigh_02

\item=Singing

\item=Singing_01

\item=Smack

\item=Smack_01

\item=Smack_02

\item=Sniff

\item=Sniff_01

\item=Sniff_02

\item=Sniff_03

\item=Snore

\item=Snore_01

\item=Swallow

\item=Swallow_01

\item=Throat

\item=Throat_01

\item=Throat_02

\item=Throat_03

\item=Uff

\item=Uff_01

\item=Ups

\item=Ups_01

\item=Whistle

\item=Whistle_01

\item=Whistle_02

\item=Whistle_03

\item=Whistle_04

\item=Whistle_05

\item=Yawn

\item=Yawn_01

\item=Yawn_02

\item=Yeee

\item=Yeee_01

\item=Yuhu

\item=Yuhu_01

\item=Yuhu_02

\item=Zas

\item=Zas_01

Easier to just paste these all in and cut out what you don’t want. Rather than wrestling with menu-based insertion.
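The ‘paste it all in, then cut’ step can also be scripted. Here is a minimal sketch in Python, assuming the tags have been copied out of Notepad++ as plain text with one \item=Name per line, as in the list above. The helper name `pick_expressives` is my own, and nothing is assumed about the internal layout of the .sde file itself.

```python
import re

# Matches tags such as \item=Laugh or \item=Laugh_02, capturing the base name.
TAG_PATTERN = re.compile(r"^\\item=([A-Za-z]+)(?:_\d+)?$")


def pick_expressives(text, wanted):
    """Keep only the tags whose base name (e.g. 'Laugh') is in `wanted`."""
    wanted_lower = {name.lower() for name in wanted}
    kept = []
    for line in text.splitlines():
        line = line.strip()
        match = TAG_PATTERN.match(line)
        if match and match.group(1).lower() in wanted_lower:
            kept.append(line)
    return kept


# A short excerpt of the Soledad list, pasted as plain text.
SAMPLE = r"""
\item=Cough
\item=Cough_01
\item=Laugh
\item=Laugh_01
\item=Laugh_02
\item=Sigh
\item=Sigh_01
"""

for tag in pick_expressives(SAMPLE, ["Laugh", "Sigh"]):
    print(tag)
```

This keeps every numbered variant of the wanted expressives (Laugh, Laugh_01 and so on) and drops the rest, ready to paste into a script.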

VOICEWARE: DSpeech has support for reading with a VoiceWare TTS, but not for a vital aspect of the voice. The first version of the TTS VoiceWare voices (e.g. VW Bridget, British) had different inflections on words if you added ! ?! or !? (again, see YouTube for demo and useful links). But this feature of the voice is not supported in DSpeech. It is supported in Balabolka. So this is another deal-breaker for DSpeech.

CONCLUSIONS: Despite what at first glance seems to be DSpeech’s more intuitive right-click tag adding, Balabolka is on several counts the superior tool for longer-form editing. It properly wraps highlighted words in starting/closing tags, which is vital if you’re TTS-coding something longer than a paragraph. It also supports VoiceWare’s ! ?! and !?, useful for one of the best British voices.

I thus suggest using the latest Balabolka for freeware TTS scripting and recording, and the old Loquendo TTS Director + its Spanish voices for creation of vocal FX, pitch and speed-shifted to match the voice being used in Balabolka. Then embed these vocal FX as audio clips in Balabolka. This is not as ideal as having Balabolka support Loquendo (it refuses to even read their voices), but it’s a viable workaround.

The ideal would be to have a standard SAPI5 voice that was ‘expressives only’, for use in Balabolka. A sort of audio FX bank, that could be reliably called with a simple tag (such as \_sneeze etc). But so far as I can see, that doesn’t exist, other than by chopping bits from my Dictionary of British Pronunciation for TTS.

Finally, note that TTS Director only ‘sees’ its own Loquendo voices, and is therefore no good as a general SAPI5 TTS script editor. TTS can be done in Adobe Captivate (used for super-PowerPoint ‘e-learning’ creation) and in CrazyTalk / Cartoon Animator, but the editing is not at all comparable to Balabolka.

Daz to Blender Bridge updated and fixed

Butaixianran has kindly created a free DazToBlender: Daz to Blender Bridge updated fork…

“I updated the official Daz To Blender Bridge, now Daz model can be exported from Blender with morphs and textures, so you can use Blender as a Daz Bridge to other 3D tools.”

It’s already had a number of bug-fixes, and animation import has been added. Normal maps can be saved to .JPG to reduce bloat. Also supports Genesis 8.1 and 9.

No texture or base mesh resolution changes are involved with the conversion, and the user is left to do that in Blender. Or just use Blender as a pass-through to other software. As always, geografts and complex geoshell and similar overlay things may not convert well.

Regrettably Blender 3.1 or higher is required, so you need a powerful enough PC to pass Blender 3.x’s “install or not?” test and get Blender to install. Update: Blender 3.51 for Windows 7 (early May 2023). Needing no installer, it will now launch on Windows 7! Hurrah.

Made with Blender – the new movie showcase website

Blend.Stream, a new showcase and aggregator site for all movies made with Blender. This means more than just the official open movies sponsored by the Blender Foundation, and the site is open to all quality films made with the software. Also keep in mind that they’re not all under Creative Commons, though they are all free to view.

Heroes of Bronze

The short film Heroes of Bronze has a release date and teaser-trailer with the date.

Read a long interview with maker Martin Klekner in the recent “Warriors” themed issue of Digital Art Live magazine (#71, August 2022).