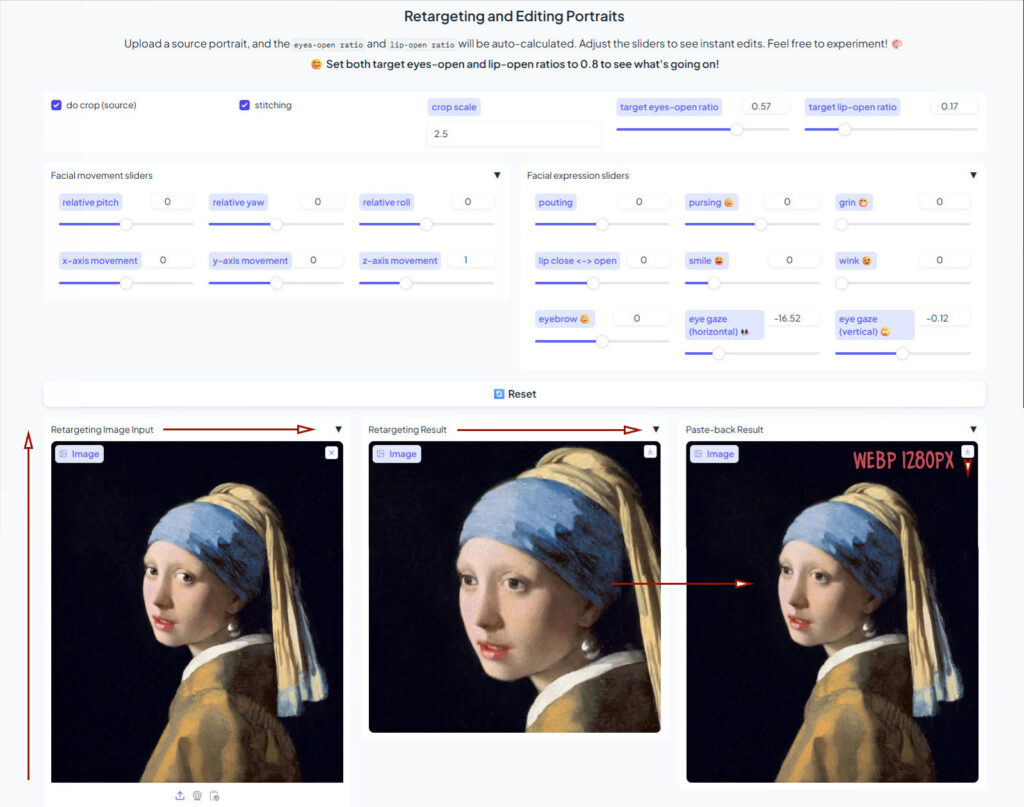

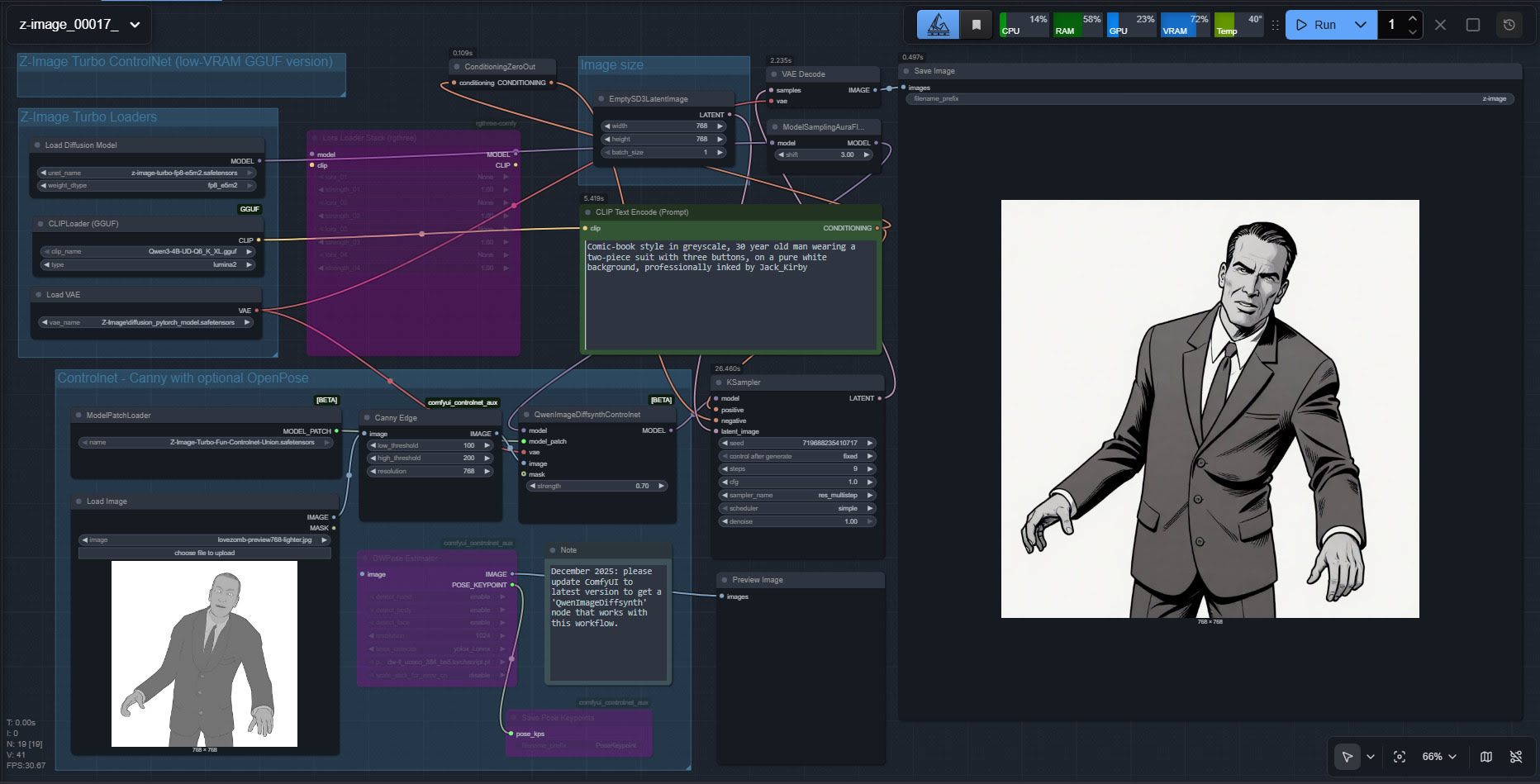

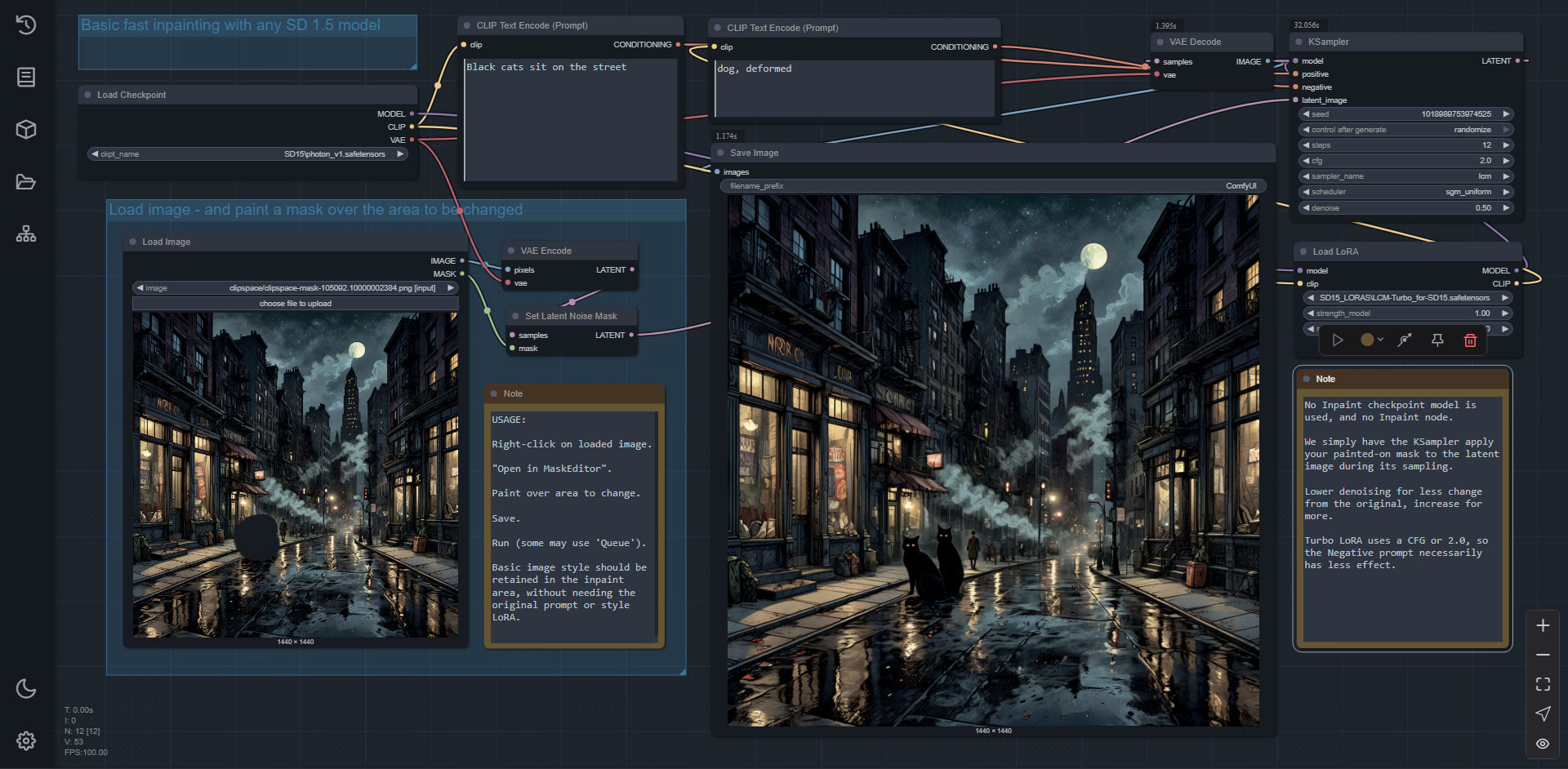

An update to yesterday’s post on the LivePortrait standalone/portable for Windows. The portable’s poor-quality, differently sized .WebP output spurred me to get it working in ComfyUI. And hurrah, I now have a simple LivePortrait expression/gaze-direction changer working in ComfyUI, with .PNG output that is the same size as the input.
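If you want to confirm that the output really matches the input dimensions, here is a minimal sketch using only Python’s standard library. It reads the width and height from the IHDR chunk at the start of a PNG file. It assumes both files are PNGs, and the file names in the usage line are hypothetical.

```python
import struct

# Every valid PNG file begins with this fixed 8-byte signature.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) from the first 24 bytes of a PNG file."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    # Bytes 8-11 hold the chunk length and bytes 12-15 the chunk type,
    # which must be IHDR for the first chunk of a PNG.
    if data[12:16] != b"IHDR":
        raise ValueError("IHDR chunk not found where expected")
    # Width and height follow as big-endian unsigned 32-bit integers.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def same_size(path_a: str, path_b: str) -> bool:
    """True if two PNG files have identical pixel dimensions."""
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        return png_size(fa.read(24)) == png_size(fb.read(24))
```

Usage (hypothetical file names): `print(same_size("input.png", "output.png"))`. Only the header is read, so this stays quick even on large images.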

The controls are not as intuitive as in the portable, so I’ve added a note explaining what the settings do.

If you downloaded the portable yesterday after seeing my post, you already have the models. Just copy them over to their relevant ComfyUI ../models/liveportrait sub-folders.

You then only need ComfyUI, these custom nodes, and the extra face-detection models linked from there.

It’s slightly slower than the portable (four seconds rather than one), but then there’s no low-grade .WebP in the mix. The above workflow should, in theory, also work on Mac and Linux.

Update: I hear there is now a newer alternative, China’s HunyuanPortrait, but it takes far longer to run, needs a powerful graphics card, and is said to lack ‘human-ness’ in its output.