The December 2012 Literary & Linguistic Computing has a new paper on enhancing the traditional scholarly article, “Toward Modeling the Social Edition: An Approach to Understanding the Electronic Scholarly Edition in the Context of New and Emerging Social Media” (its annotated bibliographies are free)…

“This article explores building blocks in extant and emerging social media toward the possibilities they offer to the scholarly edition in electronic form, positing that we are witnessing the nascent stages of a new ‘social’ edition existing at the intersection of social media and digital editing.”

The five practical possibilities noted in the paper are:

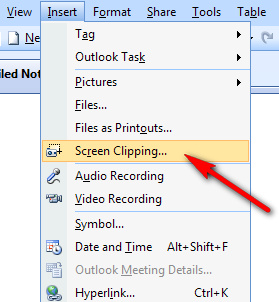

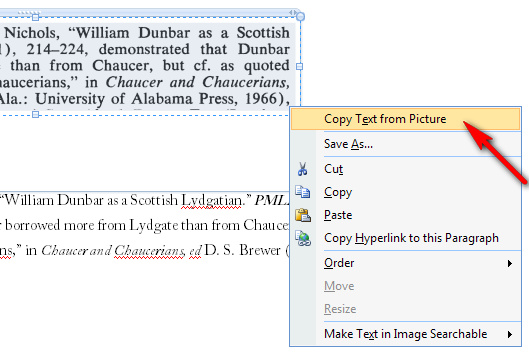

* Collaborative Annotation (via third-party browser toolbar addons).

* User-derived Content (user-made linked ‘overlay’ collections).

* Folksonomy Tagging (freeform keyword tagging, open to all).

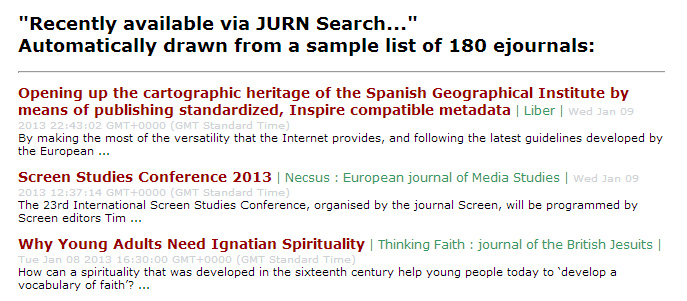

* Community Bibliography (using Zotero et al).

* Community Text-Analysis (via tools yet to be created).

That seems a sensible ‘overlay’ approach. Bolting the current social media fad-of-the-year right into the heart of a text would be asking for problems. No-one opening a document in the year 2040 will want to find that all of the document’s embedded Twitter hooks are broken, and thus they face a sea of broken pop-ups and sidebars (because in 2040 a short comment is perhaps sent simply by thinking it via a digi-telepathic implant, whereupon it gets sent to someone’s augmented-reality contact-lens complete with a beautiful little overlay cloud of colour-shaded emotional nuances…).

Adding a light digital patina of polite academic chatter and margin notes assumes that the author keeps basic control of the core text. But what if we do not remain safely within the polite scholarly culture of abstracting short quotes for ‘fair use’? What if we enter into a world of open remixing, perhaps via state mandated CC-BY licences? On certain platforms we’re already in a situation where the core digital text only appears to have a pure and elegant print-like form, while a “view source” operation shows the author’s text is held in a cat’s-cradle of structured data and code. What if future advances in such structuring/tagging mean that the text can be swiftly abstracted / summarised / remixed (probably with the aid of a cloud of automated software bots) or even rewritten? The core text would thus not simply be overlaid with a friendly social chatter. It would be opened to being policed in depth. For instance, we might imagine an efficient commercial fact-checking service (also bot-enhanced), operating in much the same way as the plagiarism bots that already patrol student essays in some universities. Hopefully such policing might be benign and useful, but it some cases it might not be. One might imagine a “Great Firewall of China” which rather than blocking a text actively rewrites it on-the-fly (in much the same way as Google Chrome currently auto-translates Chinese to English).

In such circumstances scholars might find some comfort in thinking that their work is wrapped up in the tough rhino-like hide of the reliably printable PDF. But I suspect that even our PDF silos will in time be understood as just transitory arrangements. I suspect our legacy PDF silos will eventually be gobbled up by some complex OCR-based conversion and semantic coding autobot, which will elegantly and faithfully convert them into an advanced structured form — from which we can then easily abstract and remix and rewrite them in increasingly complex ways.